Why Do Language Models Hallucinate?

Overview

Recently, OpenAI has just released the paper “Why Language Models Hallucinate” by Adam Tauman Kalai, Ofir Nachum, Santosh Vempala, and Edwin Zhang (2025).

Abstraction: Like students facing hard exam questions, large language models sometimes guess when uncertain, producing plausible yet incorrect statements instead of admitting uncertainty. Such “hallucinations” persist even in state-of-the-art systems and undermine trust. We argue that language models hallucinate because the training and evaluation procedures reward guessing over acknowledging uncertainty, and we analyze the statistical causes of hallucinations in the modern training pipeline. Hallucinations need not be mysterious—they originate simply as errors in binary classification. If incorrect statements cannot be distinguished from facts, then hallucinations in pretrained language models will arise through natural statistical pressures. We then argue that hallucinations persist due to the way most evaluations are graded—language models are optimized to be good test-takers, and guessing when uncertain improves test performance. This “epidemic” of penalizing uncertain responses can only be addressed through a socio-technical mitigation: modifying the scoring of existing benchmarks that are misaligned but dominate leaderboards, rather than introducing additional hallucination evaluations. This change may steer the field toward more trustworthy AI systems.

The paper takes a deep dive into one of the most persistent problems in large language models (LLMs): hallucinations, where models generate confident but false statements. Unlike many studies that only describe symptoms or propose patchwork fixes, this work goes further to explain:

- Why hallucinations naturally arise during pretraining, even with perfectly clean data.

- Why they persist after alignment and fine-tuning, largely due to how benchmarks reward “guessing” over honest uncertainty.

- How we can mitigate them by reforming evaluation methods, introducing confidence thresholds, and encouraging models to output “I don’t know” when appropriate.

In short, the authors argue that hallucinations are not mysterious flaws, but predictable statistical errors reinforced by current evaluation practices — and that solving them requires changing how we test and reward AI models, not just tweaking training pipelines.

1. What is hallucination?

Hallucinations are plausible but false statements generated by language models. They can show up in surprising ways, even for seemingly straightforward questions. For example, when we asked a widely used chatbot for the title of the PhD dissertation by Adam Tauman Kalai (an author of the mentioned paper), it confidently produced three different answers—none of them correct. When we asked for his birthday, it gave three different dates, likewise all wrong.

2. Findings

Think about it like a multiple-choice test. If you do not know the answer but take a wild guess, you might get lucky and be right. Leaving it blank guarantees a zero. In the same way, when models are graded only on accuracy, the percentage of questions they get exactly right, they are encouraged to guess rather than say “I don’t know.”

As another example, suppose a language model is asked for someone’s birthday but doesn’t know. If it guesses “September 10,” it has a 1-in-365 chance of being right. Saying “I don’t know” guarantees zero points. Over thousands of test questions, the guessing model ends up looking better on scoreboards than a careful model that admits uncertainty.

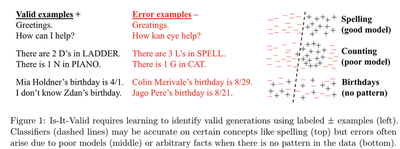

2.1. Pretraining inevitably introduces errors

Pretraining is essentially a density estimation problem: the model tries to approximate the probability distribution of language. The authors show that this is closely related to a binary classification problem: deciding whether a given output is valid or invalid. From statistical learning theory, classification always has a non-zero error rate → meaning LLMs cannot avoid mistakes. Even with perfect, error-free data, hallucinations emerge because:

- Some facts are rare or unique (e.g., a birthday mentioned only once).

- When no pattern exists in data, the model faces epistemic uncertainty (knowledge that is simply missing).

- This explains why LLMs do fine on common facts (like “Einstein’s birthday”) but hallucinate rare ones.

Key concept: Singleton rate

- The fraction of facts that appear only once in training data.

- The higher the singleton rate, the higher the expected hallucination rate.

2.2. Post-training reinforces hallucinations

After pretraining, models are fine-tuned (RLHF, RLAIF, DPO, etc.) to align with human preferences. Intuitively, one might expect this to reduce hallucinations. However, hallucinations persist because of how evaluation benchmarks are designed:

- Most benchmarks use binary grading (correct = 1, wrong = 0).

- “I don’t know” (IDK) or abstentions are treated as wrong (0 points).

- This setup rewards guessing:

- A model that always guesses when unsure scores higher than a model that truthfully admits uncertainty.

- Analogy:

- Like students on multiple-choice exams — guessing improves test scores, even if it produces confident wrong answers.

- As a result, LLMs are trained and evaluated in a permanent “test-taking mode”, where bluffing is optimal.

2.3. Why hallucinations are not mysterious

- Hallucinations are not unique AI quirks, but simply statistical classification errors under uncertainty.

- They persist because current benchmarks misalign incentives:

- A hallucination can improve benchmark performance.

- An honest abstention reduces performance.

- Thus, even advanced post-training cannot solve hallucinations if the evaluation system keeps rewarding them.

3. Proposed Solution

Redesign evaluations to reward honesty

The main recommendation: adjust scoring in existing benchmarks rather than invent new hallucination-specific tests. Benchmarks should stop penalizing abstentions and instead give credit to uncertainty when appropriate.

Explicit confidence targets

Inspired by real-world exams (e.g., SAT, GRE, Indian JEE), introduce penalties for incorrect guesses and neutral credit for IDK. Example instruction added to each benchmark task: “Answer only if you are >75% confident. Correct answer: +1 point, Wrong guess: –2 points, IDK: 0 points. This ensures models learn when it’s better to abstain rather than guess.

Behavioral Calibration

Instead of only reporting probabilities, models should act in accordance with their confidence level. For example:

- If the model is <50% confident, it should output IDK.

- If highly confident, it should answer directly.

- This approach helps align model behavior with trustworthy communication, reducing overconfident hallucinations.

4. Bonus

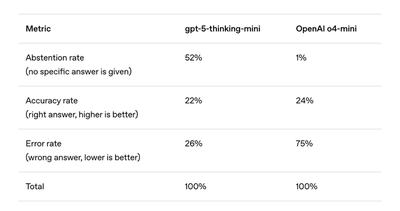

OpenAI claimed that ChatGPT also hallucinates. GPT‑5 has significantly fewer hallucinations especially when reasoning, but they still occur. In terms of accuracy, the older OpenAI o4-mini model performs slightly better. However, its error rate (i.e., rate of hallucination) is significantly higher. Strategically guessing when uncertain improves accuracy but increases errors and hallucinations.